Exploring the Best Platforms for Image Generation

A surprising amount of my time of late has been spent on AI imaging: creating images for articles, mainly, but also working with images for video production and slide deck presentations. Since I tend to prefer photographic and near-photographic images for my work, much of my focus has been on the realistic rendering capabilities of these platforms, which I’ll cover here.

I've worked with all three of the primary image generators (Stability AI's Stable Diffusion, OpenAI's DALL-E 3, and MidJourney), and while I think all three have come a LONG way from where they were a year ago, they still have quirks. A little more detail on each:

DALL-E 3 is perhaps my most reliable source of imagery for articles. It can produce both cartoonish and realistic renderings, though the realistic ones usually still have a somewhat unnatural cast to them. On the other hand, DALL-E is remarkably good at interpreting prompts, and of the three it is the one most likely to be tongue-in-cheek in its interpretations, something that I think works well on the editorial side.

MidJourney, especially v6, is the most cinematic of the three, and I've managed to put together some absolutely stunning work with it when looking for something evocative. The biggest downsides to MidJourney are that it is very literal, it tends to be conservative in how it responds to prompts, and the MidJourney team goes out of its way to flag any sort of controversial content. It also offers the least post-generation control and modeling capability of the three.

Stable Diffusion is the one I play with the most, primarily for experimenting. I run it locally, so I have full control over which models and LoRAs (small add-on models that act a bit like filters) I'm using, and I can run it at essentially no cost (by comparison, I pay about $40 a month, combined, for my DALL-E and MidJourney accounts). Having said that, Stable Diffusion can take a lot of work and many, many (many!) iterations to pull something useful out of, and the interface (I primarily use Easy Diffusion) can be finicky and occasionally very buggy. Even so, the results are frequently worth the time spent.
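For readers new to LoRAs (low-rank adaptations): a LoRA doesn't replace the base model, it stores two small matrices whose product is added to a layer's weights. The plain-Python sketch below shows that arithmetic, assuming the common alpha/rank scaling from the original LoRA paper; the `multiplier` argument stands in for the "LoRA strength" slider most UIs expose, and the function names are hypothetical rather than anything from Easy Diffusion's internals.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    inner = len(b)
    cols = len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(len(a))
    ]


def apply_lora(weight: Matrix, up: Matrix, down: Matrix,
               alpha: float, rank: int, multiplier: float = 1.0) -> Matrix:
    """Return W + multiplier * (alpha / rank) * (up @ down).

    weight: (d_out x d_in) base-model weights
    up:     (d_out x rank) LoRA "B" matrix
    down:   (rank x d_in)  LoRA "A" matrix
    """
    delta = matmul(up, down)              # low-rank update, same shape as weight
    scale = multiplier * alpha / rank     # user strength times alpha/rank scaling
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


if __name__ == "__main__":
    base = [[1.0, 0.0], [0.0, 1.0]]
    up = [[1.0], [0.0]]       # 2 x 1
    down = [[0.0, 2.0]]       # 1 x 2
    print(apply_lora(base, up, down, alpha=1.0, rank=1, multiplier=0.5))
    # [[1.0, 1.0], [0.0, 1.0]]
```

Because a LoRA only adds a small delta on top of the base weights, setting the multiplier to zero gives back the original model, and several LoRAs can be swapped or stacked on one base model, which is a large part of the control that running locally provides.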

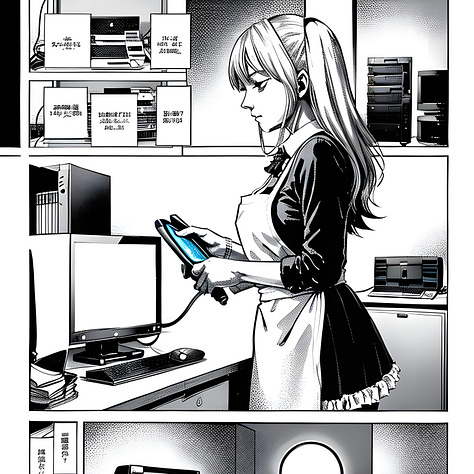

As for techniques, I've found that it is sometimes worth developing a prompt on one platform, then trying the same prompt on other platforms to get different “interpretations”. For instance, on DALL-E 3, I used the following prompt to generate the French Maid ‘Housekeeping’ picture, originally for an article on digital housekeeping:

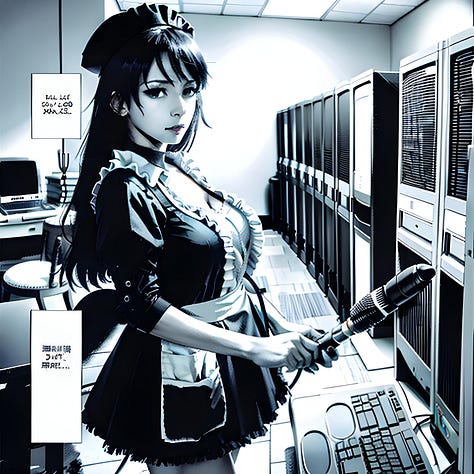

draw a picture of a french maid doing housekeeping of computer systems

Taking the same prompt into MidJourney (and making a slight adjustment to get a more realistic output), I ended up with the following:

which, while cinematic, didn’t quite give the impression I was looking for (I really lucked out with the DALL-E 3 image, which did). So I went back to the original dialog in ChatGPT and retrieved the prompt that ChatGPT had actually sent to DALL-E 3 (click on the image, then the information icon in the upper right-hand corner next to the download button). This was the new prompt:

A French maid, dressed in a traditional black and white uniform with a frilly apron, is busy doing housekeeping of computer systems. She is dusting and organizing a series of large, modern servers and computer equipment, with various cables and monitors around her. The room looks like a high-tech data center, with rows of servers and blinking lights. The maid is focused on her task, holding a small duster in one hand and a can of compressed air in the other, carefully cleaning the equipment.

Taking this back to MidJourney, I had all kinds of interesting responses, though none of them anywhere near what I’d been hoping for:

The pink one in particular looks like it came from one of the odder Terry Gilliam movies. They are cinematic shots, admittedly, and for free association (or for a longer-form video) all very intriguing, but they don't quite capture the spirit of the original.
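Moving a prompt between platforms is easier if you keep one base description and append platform-specific tweaks rather than editing copies by hand. The sketch below assumes a hypothetical `PLATFORM_SUFFIXES` table; the MidJourney entry uses its `--v`, `--style raw`, and `--ar` parameters as one possible "more realistic" adjustment, since the exact tweak used for the MidJourney run isn't stated, and the Stable Diffusion keywords are likewise only illustrative.

```python
from typing import Dict, Mapping

# Hypothetical per-platform tweaks; adjust these to taste.
PLATFORM_SUFFIXES: Dict[str, str] = {
    "dalle3": "",
    "midjourney": " --v 6 --style raw --ar 16:9",
    "stable_diffusion": ", photorealistic, highly detailed",
}


def prompt_variants(base: str,
                    suffixes: Mapping[str, str] = PLATFORM_SUFFIXES) -> Dict[str, str]:
    """Build one prompt per platform from a shared base description."""
    base = base.strip()
    return {platform: base + suffix for platform, suffix in suffixes.items()}


if __name__ == "__main__":
    variants = prompt_variants(
        "draw a picture of a french maid doing housekeeping of computer systems"
    )
    for platform, prompt in variants.items():
        print(f"{platform}: {prompt}")
```

Keeping the base text in one place means that when you retrieve a better-worded prompt (as with the one ChatGPT sent to DALL-E 3), every platform variant picks it up at once.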

I also took this back to Stable Diffusion, with an SDXL 2 model and no LoRAs, and tried the same prompt:

These were all okay, but ironically a little too photographic: they just didn’t capture the playfulness of the original DALL-E 3 image.

However, I also used the same prompt with the image2image facility, starting from the initial image (initial image left, SD image right), with the CFG (classifier-free guidance) scale, which controls how closely the output follows the prompt, set fairly low. The result was interesting, to say the least:

The latter is more “realistic”, though notice also that the buckets have been transformed into robot-looking things and her high-heeled shoes have been replaced with pumps. Again, the subtleties in the DALL-E 3 image seem to win out over the more photographic image on the right, but depending on the image and the model, this could go either way.
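CFG and image-to-image strength are easy to confuse, so here is a small sketch of what each controls. It follows the conventions of Hugging Face's diffusers library (an assumption: Easy Diffusion exposes similar sliders, but its internals may differ). Classifier-free guidance blends the model's unconditional and prompt-conditioned noise predictions as `uncond + scale * (cond - uncond)`, while img2img `strength` decides what fraction of the denoising steps are actually run on the noised starting image. The function names are hypothetical.

```python
from typing import List


def classifier_free_guidance(noise_uncond: List[float], noise_cond: List[float],
                             guidance_scale: float) -> List[float]:
    """Blend unconditional and prompt-conditioned noise predictions.

    guidance_scale = 0.0 returns the unconditional prediction,
    1.0 returns the conditioned prediction, and larger values push
    the result harder toward the prompt.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


def img2img_steps_run(num_inference_steps: int, strength: float) -> int:
    """Number of denoising steps actually applied in image-to-image mode.

    Follows the diffusers img2img timestep logic: a low strength skips
    most of the schedule, so the output stays closer to the initial image.
    """
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    t_start = max(num_inference_steps - init_timestep, 0)
    return num_inference_steps - t_start


if __name__ == "__main__":
    print(img2img_steps_run(50, 0.5))   # 25
    print(img2img_steps_run(50, 1.0))   # 50
    print(classifier_free_guidance([0.0, 1.0], [1.0, 3.0], 7.5))  # [7.5, 16.0]
```

In short, strength governs how far the result can drift from the starting picture, while the CFG scale governs how insistently the prompt is applied during the steps that do run.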

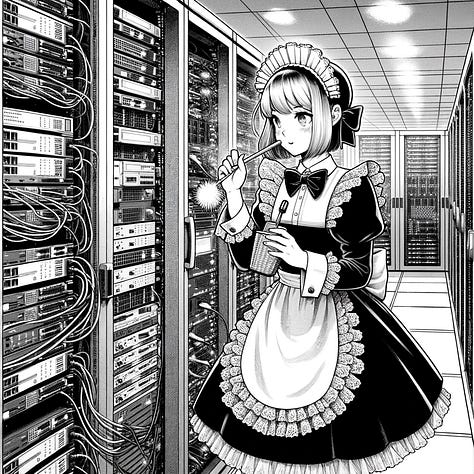

It is also possible to go with different styles, such as line art. I used a different prompt with both DALL-E 3 and Stable Diffusion:


A manga comic book page in black and white of a French maid, dressed in a traditional black and white uniform with a frilly apron, is busy doing housekeeping of computer systems. She is dusting and organizing a series of large, modern servers and computer equipment, with various cables and monitors around her. The room looks like a high-tech data center, with rows of servers and blinking lights. The maid is focused on her task, holding a small duster in one hand and a can of compressed air in the other, carefully cleaning the equipment., Manga, Comic Book

The results:

What I found was that just getting to the point of generating the Stable Diffusion versions took nearly two hours of configuring and testing, with more post-work guaranteed, while the DALL-E 3 image was generated in under a minute and didn’t differ dramatically from the original DALL-E 3 source. On the other hand, you have somewhat better control over the Stable Diffusion art, and in general you can store useful configurations as templates for future work (something that often comes in handy). This makes an especially big difference when you’re working with a single character across multiple images, as is typically the case with comic books and videos.
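Storing a configuration as a template can be as simple as serializing the settings to a JSON file and reloading them later. A minimal sketch follows; the field names (`model`, `loras`, `seed`, `guidance_scale`, and so on) are hypothetical and are not Easy Diffusion's own save format, while the prefix and suffix fields mirror how the manga prompt wraps the base description.

```python
import json
from dataclasses import asdict, dataclass, field
from pathlib import Path
from typing import Dict


@dataclass
class RenderTemplate:
    """Hypothetical reusable Stable Diffusion settings."""
    model: str
    loras: Dict[str, float] = field(default_factory=dict)  # LoRA name -> strength
    prompt_prefix: str = ""
    prompt_suffix: str = ""
    negative_prompt: str = ""
    seed: int = 42              # fixed seed so a run can be reproduced
    steps: int = 30
    guidance_scale: float = 7.0
    width: int = 1024
    height: int = 1024

    def build_prompt(self, subject: str) -> str:
        """Wrap a subject description in the template's style text."""
        return f"{self.prompt_prefix}{subject}{self.prompt_suffix}"

    def save(self, path: Path) -> None:
        path.write_text(json.dumps(asdict(self), indent=2), encoding="utf-8")

    @classmethod
    def load(cls, path: Path) -> "RenderTemplate":
        return cls(**json.loads(path.read_text(encoding="utf-8")))


if __name__ == "__main__":
    manga = RenderTemplate(
        model="my-sdxl-model.safetensors",  # hypothetical model file name
        prompt_prefix="A manga comic book page in black and white of ",
        prompt_suffix=", Manga, Comic Book",
    )
    manga.save(Path("manga_template.json"))
    print(RenderTemplate.load(Path("manga_template.json")).build_prompt("a French maid"))
```

Reusing the same template, including the seed and LoRA strengths, for each image of a character removes one source of drift between images, although it won't by itself guarantee a consistent character.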

Summary

Given all this, there’s no one clear answer as to which is the best platform for image creation; rather, each offers different strengths and weaknesses:

MidJourney works great for cinematic shots and portraiture, but it doesn’t respond as well to prompts and is, at best, indifferent for non-photographic work.

DALL-E 3 can be nearly telepathic with prompts, especially when they come through OpenAI's ChatGPT, and can produce very high-quality output with surprisingly little work. However, its model tends to produce lower-resolution images, and there may be an upper limit to the amount of experimentation you can do due to token limits.

Stable Diffusion is the only one of the three you can run locally, is the easiest to customize, and can produce high-quality, high-resolution renders. On the other hand, it requires a beefy GPU and plenty of disk space (or access to an SD server), and getting good results is still more art than science.

Kurt Cagle is the Editor of Generation AI, and is a practicing ontologist, solutions architect, and AI aficionado. He lives in Bellevue, Washington, with his wife and cats.
